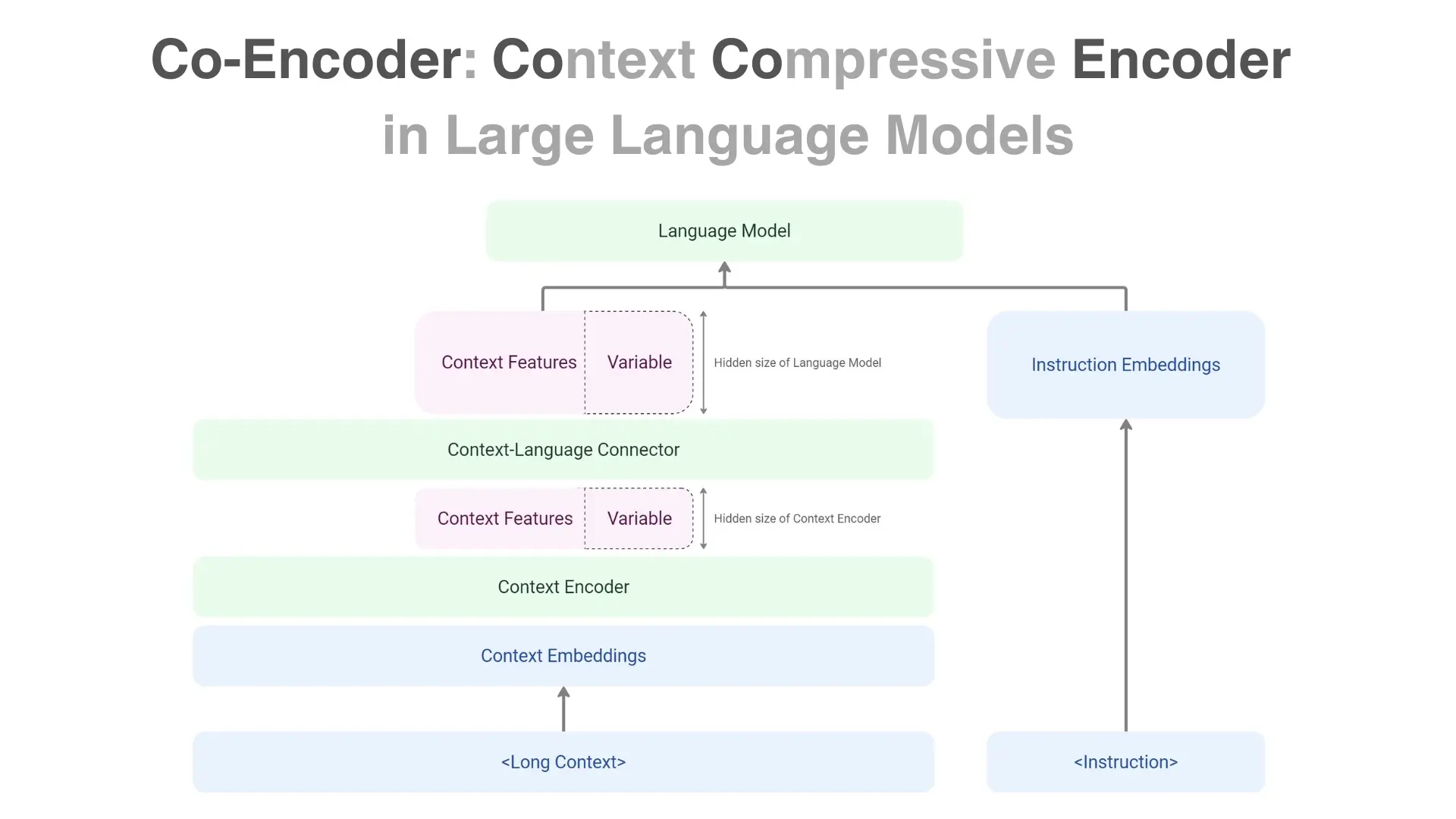

In recent years, generative AI has advanced significantly, enabling large language models (LLMs) such as ChatGPT to generate natural-sounding text. However, these models have high inference costs. Processing long texts in particular requires substantial memory and high-performance GPUs, which makes inference difficult on standard GPUs. To address this issue, we propose a Co-Encoder that compresses the input after it has been converted into matrices and feeds the compressed representation directly to the LLM, enabling low-cost inference on long texts.
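As a rough illustration of why compressing the input matrix lowers cost, the sketch below shortens a sequence of token vectors before it would reach the LLM. It is not the Co-Encoder itself: the page does not describe the compression method, so plain mean pooling is used here as a stand-in, and the function name `compress_embeddings` and the `ratio` parameter are hypothetical.

```python
def compress_embeddings(embeddings, ratio):
    """Mean-pool each run of `ratio` consecutive token vectors into one vector.

    Assumption: mean pooling is only a placeholder for whatever compression
    the Co-Encoder actually performs.
    """
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    compressed = []
    for start in range(0, len(embeddings), ratio):
        group = embeddings[start:start + ratio]  # the last group may be shorter
        dim = len(group[0])
        pooled = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
        compressed.append(pooled)
    return compressed


# Toy input: 8 token vectors of dimension 2 (a real model uses thousands of dimensions).
tokens = [[float(i), float(2 * i)] for i in range(8)]
short = compress_embeddings(tokens, ratio=4)
print(len(tokens), "->", len(short))  # sequence length before and after compression
```

Because the memory and compute needed for attention grow with sequence length, handing the LLM a shorter matrix is what makes long-text inference cheaper. A fixed pooling step like this one would discard information, which is presumably why a dedicated encoder is proposed instead.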

Creator

Rakuto Suda (Year: 2024 / Mentor: Hirokazu Nishio)

Pitch

To show English subtitles:

1. Play the video.
2. Click the CC icon.
3. Click Settings.
4. Click Subtitles/CC.
5. Click Auto-translate.
6. Click English (or another language you prefer).
